Software Quality and Testing Summary

Table of Contents

- Table of Contents

- Terminology

- Principles of software testing

- Test Design

- JUnit

- Testing techniques

- Boundary testing

- Variables:

- Equivalence partitioning and boundary analysis:

- Structural testing

- Strategy:

- Test cases:

- Structural testing

- Design by contracts

- Test Oracles

- Pre- and post-conditions

- Invariant

- Design by contracts

- Testing for Liskov Substitution Principle (LSP) Compliance

- Property-Based testing

- Testing Pyramid

- Test doubles

- Design for testability

- Web testing

- Javascript Unit testing

- Javascript Unit test without framework

- Javascript UI test without framework

- JavaScript unit testing (with React and Jest)

- Tests for the UI with Jest

- Jest mocks

- Snapshot testing

- End-to-end testing

- Behaviour-driven design

- Usability and accessibility testing

- Load and performance testing

- Automated visual regression testing (screenshot testing)

- SQL testing

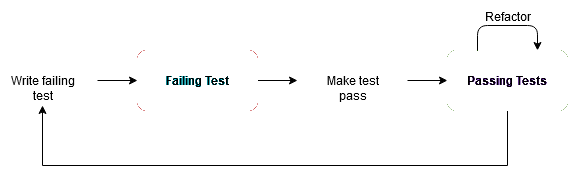

- Test Driven Development

- Test code quality and engineering

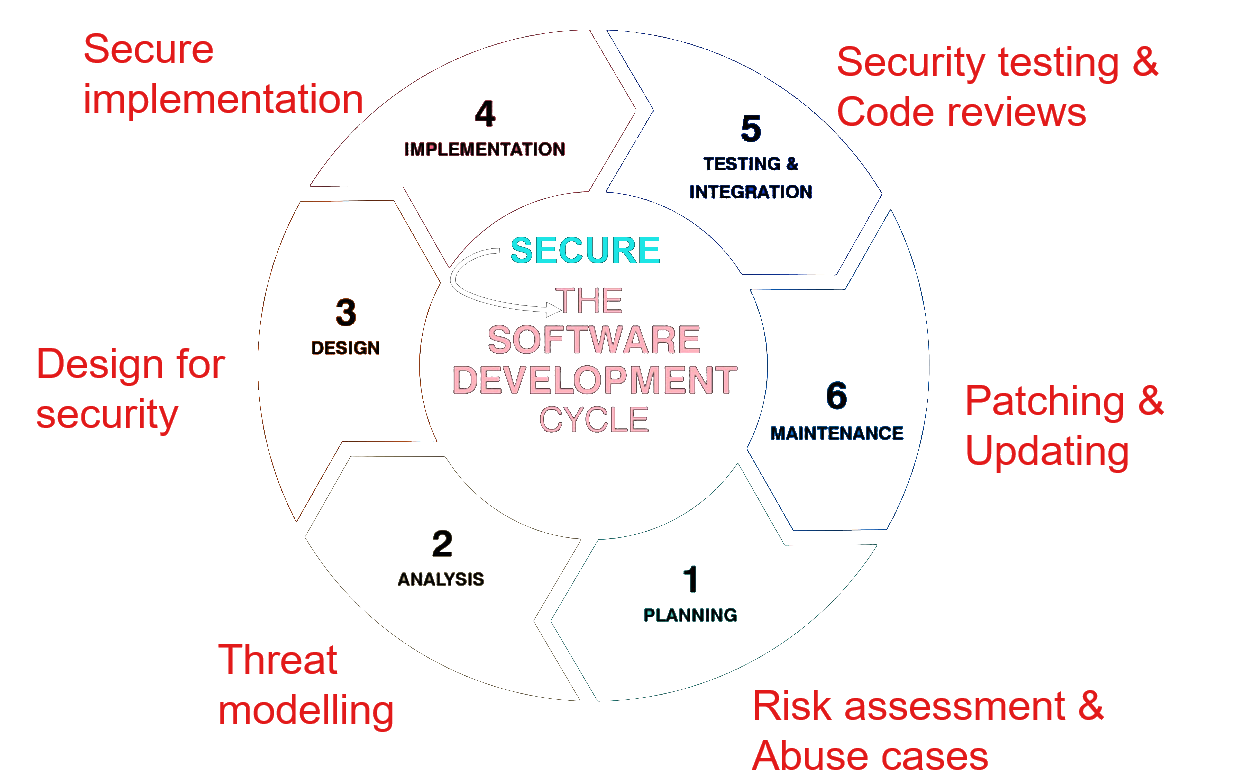

- Security Testing

- Intelligent testing

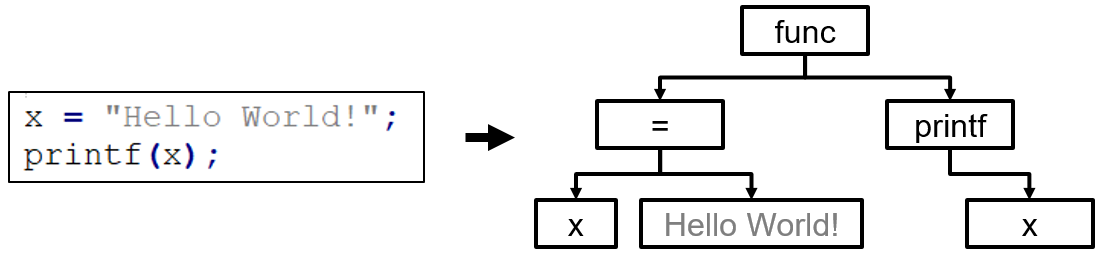

- Mutation Testing

- Fuzz testing

- Search-based software testing

Terminology

- Failure: Manifested inability of a system to performa required function. (When a system stops working as expected)

- Defect, fault, bug: The cause of the failure in terms of code/hardware implementation.

- Error (mistake): The cause of the bug (i.e. developer negligency)

- Defect, fault, bug: The cause of the failure in terms of code/hardware implementation.

- Testing: attempt to trigger failures

- Debugging: attempt to find the failure bug (defect, fault)

- Verification: Verify that the system behaves bug free

- Validation: Validate that the system delivers the busines value it should deliver (features)

Small checks

A common technique developers use (which we will try as much as possible to convince you not to do) is that they implement the program based on the requirements, and then perform “small checks” to make sure the program works as expected. However these checks are arbitrary and often not enough.

Principles of software testing

- Testing cannot show absence of bugs:

- Abscence of evidence is not evidence of absence.

- Testing specific scenarios only ensures those scenarios behave as expected.

- Exaustive testing is impossible:

- Possible scenarios increase exponentially as features are added. In a moderately large program, it’s impossible to test them all, but since bugs are not uniformly distributed we should focus on finding the bug-prone areas.

- Testing needs to start early

- Defects tend to be clustered

- Pesticide paradox yields test methods ineffective:

- Applying the same techniques over and over yields diminishing returns as you are leaving other types of bugs untested

- There is no one silver bullet testing strategy that can guarantee a complete bug-free software. Combining different testing strategies yields a better result in finding bugs.

- Testing is context-dependent

- A mobile app needs diffferent tests than a web app

- There is more to quality than absence of defects

- Besides software verification (bug free) we need software validation to ensure business value.

Test Design

- Decide which of the inintely many possible test cases to create

- Maximize information gain

- Minimize cost

- test strategy: Systematic approach to reach test cases

- targets specific types of faults until a given adequacy criterion is achieved

- Test design begins at the start of the project

Test levels

Have different levels of granularity:

- Unit testing

- Integration testing

- System testing

- Manual

Test types

Different objectives:

- Functionality (old/new)

- Security

- Performance

- …

JUnit

The steps to create a JUnit class/test is often the following:

-

Create a Java class under the directory /src/test/java/roman/ (or whatever test directory your project structure uses). As a convention, the name of the test class is similar to the name of the class under test. For example, a class that tests the RomanNumeral class is often called RomanNumeralTest. In terms of package structure, the test class also inherits the same package as the class under test.

-

For each test case we devise for the program/class, we write a test method. A JUnit test method returns void and is annotated with @Test (an annotation that comes from JUnit 5’s org.junit.jupiter.api.Test). The name of the test method does not matter to JUnit, but it does matter to us. A best practice is to name the test after the case it tests.

-

The test method instantiates the class under test and invokes the method under test. The test method passes the previously defined input in the test case definition to the method/class. The test method then stores the result of the method call (e.g., in a variable).

-

The test method asserts that the actual output matches the expected output. The expected output was defined during the test case definition phase. To check the outcome with the expected value, we use assertions. An assertion checks whether a certain expectation is met; if not, it throws an AssertionError and thereby causes the test to fail. A couple of useful assertions are:

- Assertions.assertEquals(expected, actual): Compares whether the expected and actual values are equal. The test fails otherwise. Be sure to pass the expected value as the first argument, and the actual value (the value that comes from the program under test) as the second argument. Otherwise the fail message of the test will not make sense.

- Assertions.assertTrue(condition): Passes if the condition evaluates to true, fails otherwise.

- Assertions.assertFalse(condition): Passes if the condition evaluates to false, fails otherwise.

- More assertions and additional arguments can be found in JUnit’s documentation. To make easy use of the assertions and to import them all in one go, you can use import static org.junit.jupiter.api.Assertions.*;.

import static org.junit.jupiter.api.Assertions.*;

import org.junit.jupiter.api.Test;

public class RomanNumeralTest {

@Test

void convertSingleDigit() {

RomanNumeral roman = new RomanNumeral();

int result = roman.convert("C");

assertEquals(100, result);

}

@Test

void convertNumberWithDifferentDigits() {

RomanNumeral roman = new RomanNumeral();

int result = roman.convert("CCXVI");

assertEquals(216, result);

}

@Test

void convertNumberWithSubtractiveNotation() {

RomanNumeral roman = new RomanNumeral();

int result = roman.convert("XL");

assertEquals(40, result);

}

}

AAA Automated tests: Arrange, Act, Assert

- Arrange: Define the input values that will be passed onto the automated test

- Act: Pass the input values by means of one or more method calls

- Assert: Execute assert instructions

@Test

void convertSingleDigit() {

// Arrange: we define the input values

String romanToBeConverted = "C";

// Act: we invoke the method under test

int result = roman.convert(romanToBeConverted);

// Assert: we check whether the output matches the expected result

assertEquals(100, result);

}

@BeforeEach

- JUnit runs methods that are annotated with @BeforeEach before every test method to avoid code duplication.

import org.junit.jupiter.api.BeforeEach;

import org.junit.jupiter.api.Test;

import static org.junit.jupiter.api.Assertions.*;

class RomanNumeralTest {

private RomanNumeral roman;

@BeforeEach

void setup() {

roman = new RomanNumeral();

}

@Test

void convertSingleDigit() {

int result = roman.convert("C");

assertEquals(100, result);

}

@Test

void convertNumberWithDifferentDigits() {

int result = roman.convert("CCXVI");

assertEquals(216, result);

}

@Test

void convertNumberWithSubtractiveNotation() {

int result = roman.convert("XL");

assertEquals(40, result);

}

}

@ParameterizedTest

- We write a generic test method whose values are generated in runtime by the parameters of such method.

- To feed those values we define a source with

@MethodSource("generatorMethodName") private static Stream<Arguments> generator()returns aStream.of(arguments)that will be inserted as paramaters in the parameterized test method.- The arugments must have the same number of elements as the paramaterized method

- The parameterized method will be run each time for each stream of arguments sent

- To feed those values we define a source with

import org.junit.jupiter.params.ParameterizedTest;

import org.junit.jupiter.params.provider.Arguments;

import org.junit.jupiter.params.provider.MethodSource;

import java.util.stream.Stream;

import static org.junit.jupiter.api.Assertions.*;

class NumFinderTest {

// This one line instantiation in practice is run each time before each test

private final NumFinder n = new NumFinder();

@ParameterizedTest

@MethodSource("generator")

void getMinMax(int[] nums, int expectedMin, int expectedMax) {

n.find(nums);

assertEquals(expectedMax, n.getLargest());

assertEquals(expectedMin, n.getSmallest());

}

private static Stream<Arguments> generator() {

Arguments tc1 = Arguments.of(new int[]{27, 26, 25}, 25, 27);

Arguments tc2 = Arguments.of(new int[]{5, 2, 15, 27}, 2, 27);

return Stream.of(tc1, tc2);

}

}

@CsvSource

- The CsvSource expects list of strings, where each string represents the input and output values for one test case.

@ParameterizedTest(name = "small={0}, big={1}, total={2}, result={3}")

@CsvSource({

// The total is higher than the amount of small and big bars.

"1,1,5,0", "1,1,6,1", "1,1,7,-1", "1,1,8,-1",

// No need for small bars.

"4,0,10,-1", "4,1,10,-1", "5,2,10,0", "5,3,10,0",

// Need for big and small bars.

"0,3,17,-1", "1,3,17,-1", "2,3,17,2", "3,3,17,2",

"0,3,12,-1", "1,3,12,-1", "2,3,12,2", "3,3,12,2",

// Only small bars.

"4,2,3,3", "3,2,3,3", "2,2,3,-1", "1,2,3,-1"

})

void boundaries(int small, int big, int total, int expectedResult) {

int result = new ChocolateBars().calculate(small, big, total);

Assertions.assertEquals(expectedResult, result);

}

Testing techniques

Advantages of test automation

As in comparision to manually checking System.out.println…

- Less prone to human mistakes

- Faster than developers

- Efficient refactoring (you can change the code without having to change the tests)

Specification based testing (from requirements)

- These use the requirements of the program (often written as text; think of user stories and/or UML use cases) as input for testing.

- these techniques are also referred to as black box testing as they dont need you to know details such as in which software the program was developed or which data structures are used in the implementation

Partioning the input space

- Based on the requirements -> create paritions that represent each possible case -> create unit test

Equivalence partitioning

- The idea that inputs being equivalent to each other yield the same outcome (i.e. testing for multiples of 4 can take 4q with any q)

- Chosing 1 of the equivalent elements is more than enough

Category-Partition method (from method inputs)

- Systematic way of deriving test cases, based on the characteristics of the input parameters.

- reduces the number of tests to a practical number

- Identify the parameters, or the input for the program. For example, the parameters your classes and methods receive.

- Derive characteristics of each parameter. For example, an int year should be a positive integer number between 0 and infinite.

- Some of these characteristics can be found directly in the specification of the program.

- Others might not be found from specifications. For example, an input cannot be null if the method does not handle that well.

- Add constraints in order to minimise the test suite.

- Generate combinations of the input values. (Like a cartesian product)

- Exceptional cases can be just tested once and thus no need to combine them into the cartesian product (the constraints should be applied after the cartesian product).

Boundary testing

- Test that regard edge cases

- This boundaries regard the boundaries of test partitions

- we can find such boundaries by finding a pair of consecutive input values [p1,p2], where p1 belongs to partition A, and p2 belongs to partition B.

- However, in longer conditions, full of boundaries, the number of combinations might be too high, making it unfeasible for the developer to test them all.

On and Off points

- On-point: The on-point is the value that is exactly on the boundary. This is the value we see in the condition itself.

- Note that, depending on the condition, an on-point can be either an in- or an out-point.

- some authors argue that testing boundaries is enough. If the number of test cases is indeed too high, and it is just too expensive to do them all, prioritization is important, and we suggest testers to indeed focus on the boundaries.

- Off-point: The off-point is the value that is closest to the boundary and that flips the condition. If the on-point makes the condition true, the off point makes it false and vice versa. Note that when dealing with equalities or inequalities (e.g. x=6x = 6x=6 or x≠6x \neq 6x≠6), there are two off-points; one in each direction.

- In-points: In-points are all the values that make the condition true.

- Out-points: Out-points are all the values that make the condition false.

The problem is that this boundary is just less explicit from the requirements. Boundaries also happen when we are going from “one partition” to another. There is a “single condition” that we can use as clear source. In these cases, what we should do is to devise test cases for a sequence of inputs that move from one partition to another.

CORRECT way for boundary testing

- Conformance

- Test what happens when your input is not in conformance with what is expected. i.e. string instead of int, not an email, etc.

- Ordering

- Test different input order (i.e. sometimes the method only worked for sorted arrays)

- Range

- Test what happens when we provide inputs that are outside of the expected range. (i.e. negative numbers for age)

- Refference (for OOP methods)

- What it references outside its scope

- What external dependencies it has

- Whether it depends on the object being in a certain state

- Any other conditions that must exist

- Existence

- Does the system behave correctly when something that is expected to exist, does not? i.e. null pointer errors

- Cardinality

- Test loops in different situations, such as when it actually performs zero iterations, one iterations, or many.

- Time

- What happens if the system receives inputs that are not ordered in regards to date and time?

- Timing of successive events

- Does the system handle timeouts well?

- Does the system handle concurrency well? (multiple computations are happening at the same time.)

- Time formats and time zones

Domain testing

- combination of equivalent class analysis (the idea that inputs being equivalent to each other yield the same outcome, so just test 1 value for the partition, which was identified from the requirements and from the method inputs) and -> boundary testing (just test the on and off points)

- We read the requirement

- We identify the input and output variables in play, together with their types, and their ranges.

- We identify the dependencies (or independence) among input variables, and how input variables influence the output variable.

- We perform equivalent class analysis (valid and invalid classes).

- We explore the boundaries of these classes.

- We think of a strategy to derive test cases, focusing on minimizing the costs while maximizing fault detection capability.

- We generate a set of test cases that should be executed against the system under test.

Example

Basic structre:

Variables:

- a, explain

- b, explain

Dependencies:

I see some dependency between variables A and B, explain …

Equivalence partitioning and boundary analysis:

- variable A:

- partition 1

- partition 2

- variable B:

- partition 3

- partition 4

- (a, b)

- partition 5

- partition 6

- Boundaries

- boundary 1: explanation

- boundary 2: explanation

Structural testing

- Structural testing helped me in finding new test cases, A, B, and C…

- Domain tests and structural tests together achieve 100% branch+condition coverage.

Strategy:

- Combine everything from domain and structural testing…

- Total number of tests = 3

Test cases:

- T1 = …

- T2 = …

- T3 = …

Problem:

/**

* <p>Converts all the delimiter separated words in a String into camelCase,

* that is each word is made up of a titlecase character and then a series of

* lowercase characters.</p>

*

* <p>The delimiters represent a set of characters understood to separate words.

* The first non-delimiter character after a delimiter will be capitalized. The first String

* character may or may not be capitalized and it's determined by the user input for capitalizeFirstLetter

* variable.</p>

*

* <p>A <code>null</code> input String returns <code>null</code>.

* Capitalization uses the Unicode title case, normally equivalent to

* upper case and cannot perform locale-sensitive mappings.</p>

*

* @param str the String to be converted to camelCase, may be null

* @param capitalizeFirstLetter boolean that determines if the first character of first word should be title case.

* @param delimiters set of characters to determine capitalization, null and/or empty array means whitespace

* @return camelCase of String, <code>null</code> if null String input

*/

public String toCamelCase(String str, final boolean capitalizeFirstLetter, final char... delimiters) {

// ...

}

- The final char… delimiters allows you to pass any amount of delimiters by adding more char arguments. For example toCamelCase(“hello:world”, true, ‘:’, ‘;’) would have two delimiters. You can see this delimiters parameter as an array.

Answer:

- Variables:

- str - the original string

- capitalizeFirstLetter - boolean

- delimiters - array of chars

- output - CamelCased string

- Dependencies:

- There are no constraint dependencies among the input variables.

- Equivalence and Boundary Analysis:

- Variable str

- null

- empty

- non-empty single word

- non-empty multiple words

- Variable capitalizeFirstLetter

- true

- false

- Variable delimiters

- null

- no delimiter

- single delimiter

- multiple delimiters

- Variable delimiters, str:

- delimiter exists in the string

- delimiter does not exist in the string

- string consists of only delimiters

- Boundaries:

- No delimiter → single delimiter → multiple delimiters

- Single word → Multiple words

- Variable str

- Strategy

- Single tests for null and empty.

- Combine non-empty single word with all partitions in capitalize first letter

- Combine non-empty multiple words with (delimiters, str). Choose either true or false for capitalize first letter; we will not combine with it as the number of tests would grow very large

- Note that we choose words that are not already in the correct format.

- Test cases

- T1 null = (null, true, ‘.’) -> null

- T2 empty = (“”, true, ‘.’) -> “”

- T3 non-empty single word, capitalize first letter = (“aVOcado”, true, ‘.’) -> “Avocado”

- T4 non-empty single word, not capitalize first letter = (“aVOcado”, false, ‘.’) -> “avocado”

- T5 non-empty single word, capitalize first letter, no delimiter = (“aVOcado”, true) -> “Avocado”

- T6 non-empty single word, capitalize first letter, single existing delimiter = (“aVOcado”, true, ‘c’) -> “AvoAdo”

- T7 non-empty single word, capitalize first letter, single non-existing delimiter (skip as already tested with T4)

- T8 non-empty single word, capitalize first letter, multiple delimiters = (“aVOcado”, true, ‘c’, ‘d’) -> “AvoAO”

- T9 non-empty multiple words, capitalize first letter, no delimiter = (“aVOcado bAnana”, true) -> “AvocadoBanana”

- T10 non-empty multiple words, capitalize first letter, single existing delimiter = (“aVOcado-bAnana”, true, ‘-‘) -> “AvocadoBanana”

- T11 non-empty multiple words, capitalize first letter, single non-existing delimiter = (“aVOcado bAnana”, true, ‘x’) -> “AvocadoBanana”

- T12 non-empty multiple words, capitalize first letter, multiple delimiters, existing delimiter = (“aVOcado bAnana”, true, ‘ ‘, ‘n’) -> “AvocadoBaAA”

- T13 non-empty multiple words, capitalize first letter, multiple delimiters, non-existing delimiter = (“aVOcado bAnana”, true, ‘x’, ‘y’) -> “AvocadoBanana”

- T14 delimiters equal to null = (“apple”, true, null) -> “Apple”

- T15 only delimiters in the word = (“apple”, true, ‘a’, ‘p’, ‘l’, ‘e’) -> “apple”

package delft;

import static org.assertj.core.api.Assertions.*;

import java.util.stream.*;

import org.junit.jupiter.params.*;

import org.junit.jupiter.params.provider.*;

class Solution {

private final DelftCaseUtilities delftCaseUtilities = new DelftCaseUtilities();

@MethodSource("generator")

@ParameterizedTest(name = "{0}")

void domainTest(String name, String str, boolean firstLetter, char[] delimiters, String result) {

assertThat(delftCaseUtilities.toCamelCase(str, firstLetter, delimiters)).isEqualTo(result);

}

private static Stream<Arguments> generator() {

return Stream.of(Arguments.of("null", null, true, new char[]{'.'}, null),

Arguments.of("empty", "", true, new char[]{'.'}, ""),

Arguments.of("non-empty single word, capitalize first letter", "aVOcado", true, new char[]{'.'},

"Avocado"),

Arguments.of("non-empty single word, not capitalize first letter", "aVOcado", false, new char[]{'.'},

"avocado"),

Arguments.of("non-empty single word, capitalize first letter, no delimiters", "aVOcado", true,

new char[]{}, "Avocado"),

Arguments.of("non-empty single word, capitalize first letter, single delimiter", "aVOcado", true,

new char[]{'.'}, "Avocado"),

Arguments.of("non-empty single word, capitalize first letter, multiple delimiters", "aVOcado", true,

new char[]{'c', 'd'}, "AvoAO"),

Arguments.of("non-empty multiple words, capitalize first letter, no delimiters", "aVOcado bAnana", true,

new char[]{}, "AvocadoBanana"),

Arguments.of("non-empty multiple words, capitalize first letter, single existing delimiter",

"aVOcado-bAnana", true, new char[]{'-'}, "AvocadoBanana"),

Arguments.of("non-empty multiple words, capitalize first letter, single non-existing delimiter",

"aVOcado bAnana", true, new char[]{'x'}, "AvocadoBanana"),

Arguments.of("non-empty multiple words, capitalize first letter, multiple existing delimiters",

"aVOcado bAnana", true, new char[]{' ', 'n'}, "AvocadoBaAA"),

Arguments.of("non-empty multiple words, capitalize first letter, multiple non-existing delimiters",

"aVOcado bAnana", true, new char[]{'x', 'y'}, "AvocadoBanana"),

Arguments.of("delimiters is null", "apple", true, null, "Apple"),

Arguments.of("only delimiters in word", "apple", true, new char[]{'a', 'p', 'l', 'e'}, "apple"));

}

}

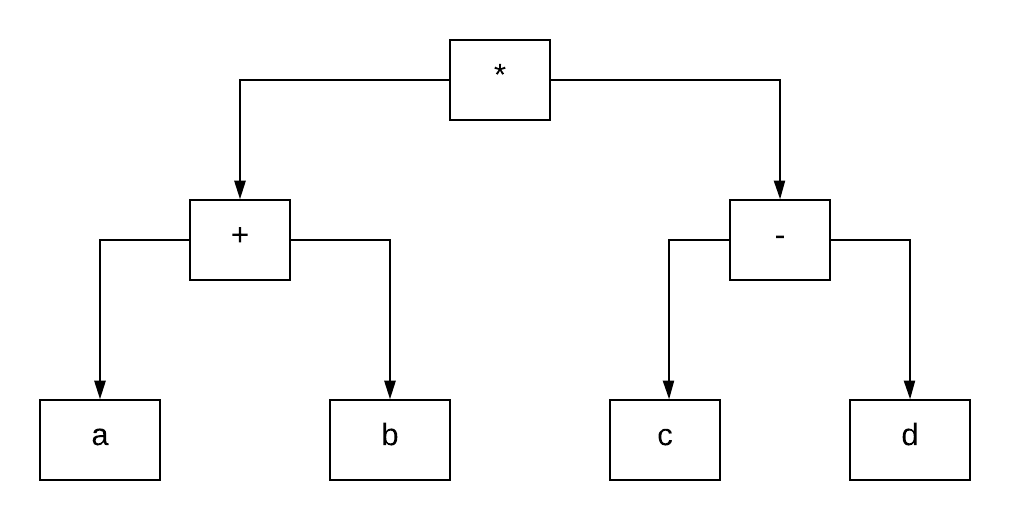

Structural testing

- Uses the source code to derive tests

- Helps us determine when to stop testing

Line coverage

- test line by line

- useful to do after doing requirements testing to fill in the coverage gaps

- Goal is that the line is exercised at least once by at least 1 test. It doesnt mean that all possible scenarios have been tested. Such is the case when all conditions are true, we can achieve 100% coverage, but we haven’t tested scenarios in which (some) conditions are false.

- Goal is to achieve 100% coverage

Block (Statement) coverage

- Normalizes the number of statement lines into blocks, as some developers might use more lines than others for the same block.

- Jacoco tests byte code level

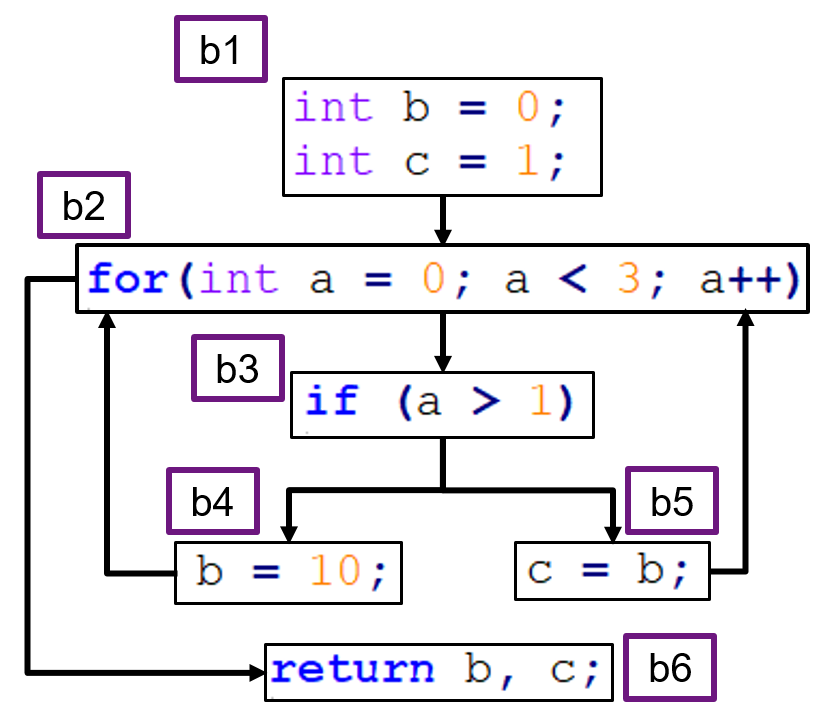

- Uses a control flow graph (CFG)

- A basic block is composed of “the maximum number of statements that are executed together no matter what happens”. That is, until a condition is hit (a decision block), which is a romboid with only 2 edges (true and false).

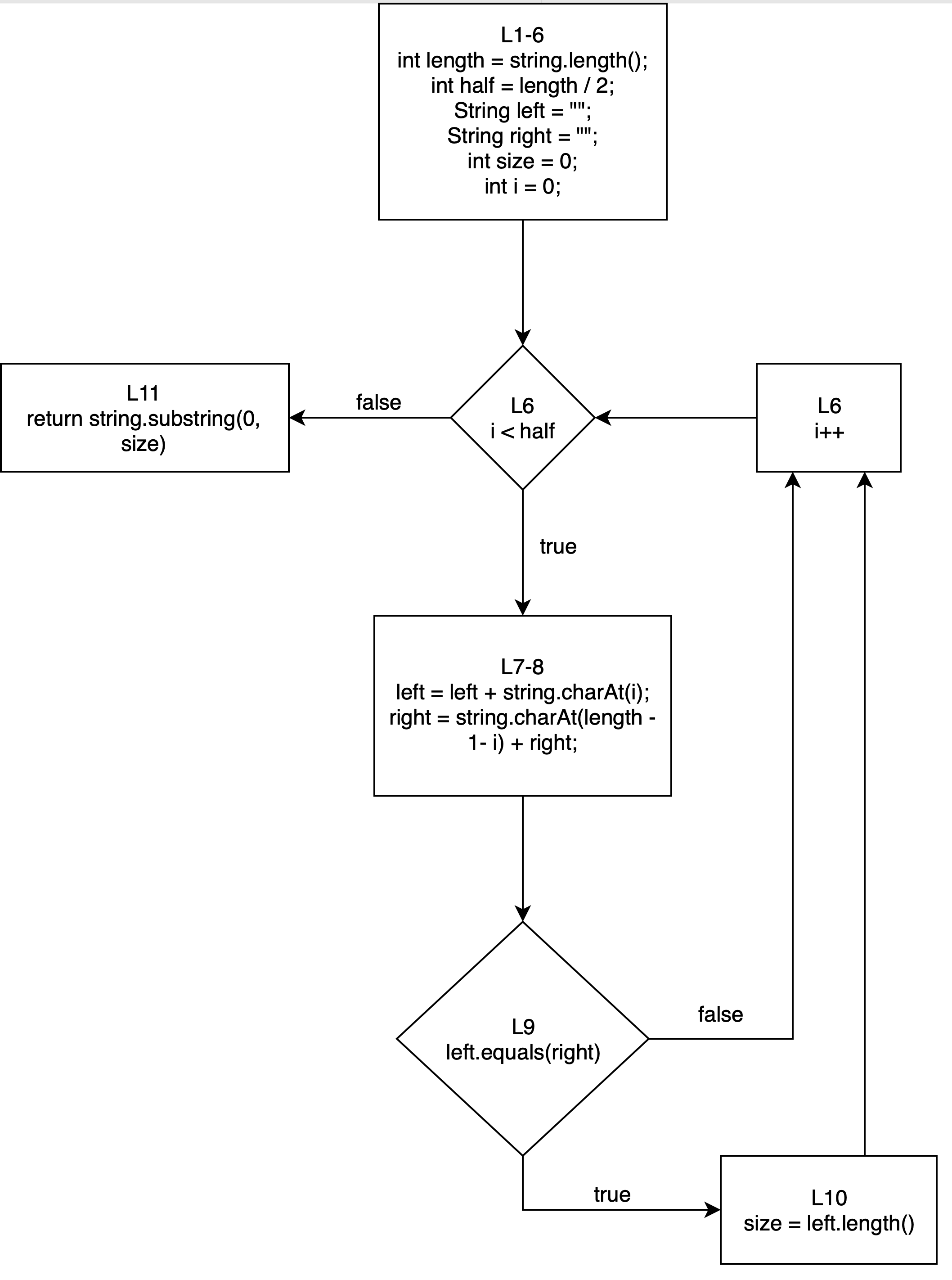

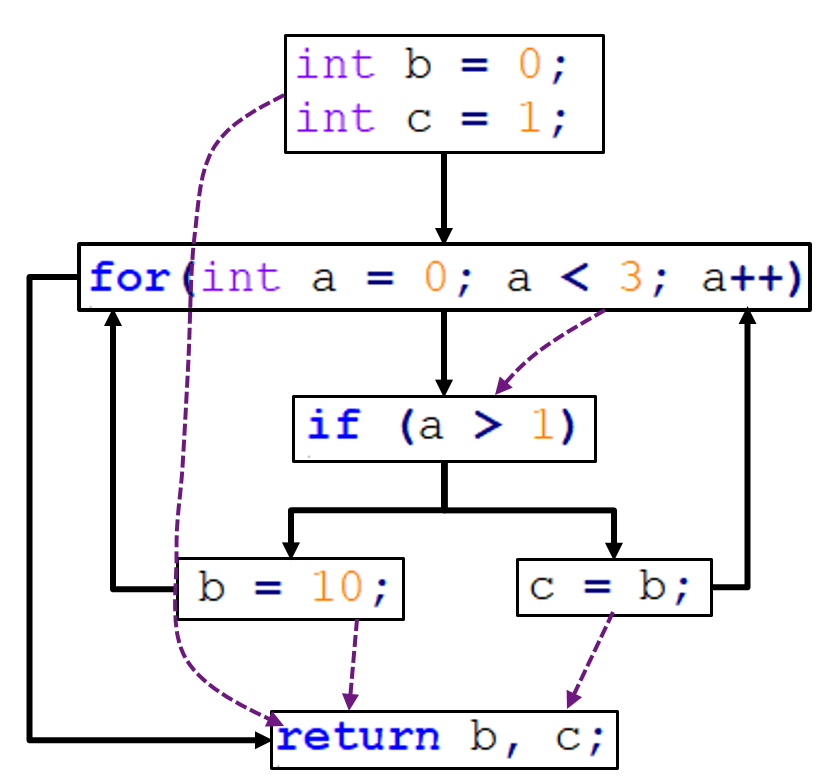

CFG example

public String sameEnds(String string) {

int length = string.length();

int half = length / 2;

String left = "";

String right = "";

int size = 0;

for (int i = 0; i < half; i++) {

left = left + string.charAt(i);

right = string.charAt(length - 1 - i) + right;

if (left.equals(right)) {

size = left.length();

}

}

return string.substring(0, size);

}

- we split the for loop into three blocks: the variable initialisation, the decision block, and the increment.

Branch (decision) coverage

- Make tests for each possible branch emerging from a condition

- Branch coverage gives two branches for each decision, no matter how complicated or complex the decision is

- Arrows with either true or false (i.e., both the arrows going out of a decision block) are branches, and therefore must be exercised.

- When a decision gets complicated, i.e., it contains more than one condition like a > 10 && b < 20 && c < 10, branch coverage might not be enough to test all the possible outcomes of all these decisions.

Condition coverage (Full branch coverage vs basic coverage)

- To do so we split the conditions into multiple decision blocks. This means each of the conditions will be tested separately, and not only the “big decision block”.

- Looking only at the conditions themselves while ignoring the overall outcome of the decision block is called basic condition coverage.

- Whenever we mention condition coverage or full condition coverage, we mean condition+branch coverage.

- A minimal test suite that achieves 100% branch coverage has the same number of test cases as a minimal test suite that achieves 100% full condition coverage.

- Be careful with lazy operators (&&, || …) as failing the first one will not exercise the next one(s) and thus might have impact on the condition coverage

Basic coverage:

- The basic condition coverage IS NOT 2^conditions (this is the path coverage), it’s just 2 x conditions (true or false)

Full coverage:

- Is the path coverage

Path coverage

- Whereas branch coverage only cares about executing a (sub-)condition as true and false, path coverage evaluates the (sub-)conditions in all the possible forms (i.e. a condition might have 4 variables so we need 2^4 tests to evaulate all scenarios)

- Path coverage does not consider the conditions individually. Rather, it considers the (full) combination of the conditions in a decision.

- It’s exponentially hard to achieve full path coverage, it is advised to just focus on the important ones.

- Another common criterion is the Multiple Condition Coverage, or MCC. To satisfy the MCC criterion, a condition needs to be exercised in all of its possible combinations. Path coverage is like this except for unbounded or long loops which may itereate an infinte number of times.

Boundary Adequacy

- Given that exhaustive testing is impossible, testers often rely on the loop boundary adequacy criterion to decide when to stop testing a loop:

- A test case exercises the loop zero times.

- A test case exercises the loop once.

- A test case exercises the loop multiple times.

- Rely on specification based techniques to determine the exact number of times to test.

Modified Condition/Decision Coverage (MC/DC)

- Instead of aiming at testing all the possible combinations, we follow a process in order to identify the “important” combinations.

- For N conditions you only need N+1 tests

- The idea of MC/DC is to exercise each condition in a way that it can, independently of the other conditions, affect the outcome of the entire decision. In short, this means that every possible condition of each parameter must have influenced the outcome at least once.

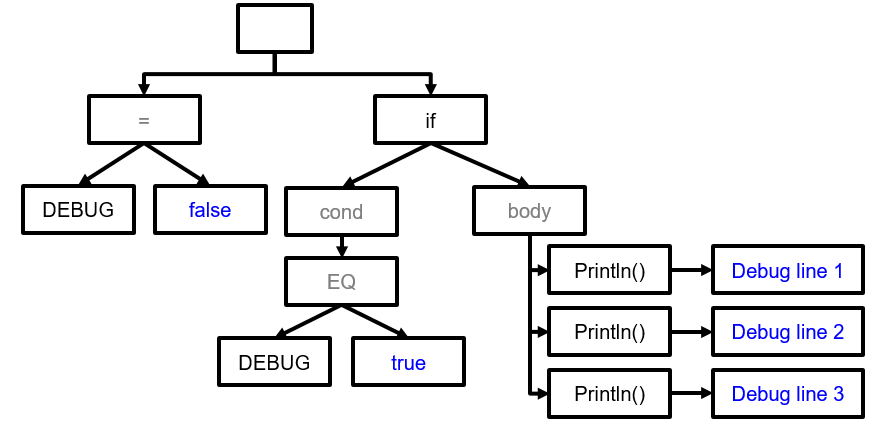

Design by contracts

Self testing

- Software systems that can test themselves

- Self-checks dont offer any user visible functionality they’re just an additional health check to report whether the system is working as expected

- Self-checks can be conducted during testing as well as live

- Self-checks are therefore included in the program itself (as opposed to the Junit tests)

Assertions

assert <condition> : "<message>";

public class MyStack(){

public Element pop() {

assert count() > 0;

// method body

}

}

- If the boolean expression

assert count() > 0is true, the program wont do nothing. - If the boolean expression is false, then it rasies an

AssertionError - However, assertion checking can be disabled at runtime.

- To enable the asserts, we have to run Java with a special argument in one of these two ways: java -enableassertions or java -ea. When using Maven or IntelliJ, the assertions are enabled automatically when running tests.

- When enabled, if an assert fails then it crashes the app, unless catched. Catching can only bring the system to a consistent state from which the app can be restarted.

- The idea of assertions is not to do logic checks (like those with illelArgumentException) but to act as sanity checks that the code has been written properly and does what it is supposed to do.

- You should never make a test that expects an assertion to fail. There must be no bug free scenario in which an assertion fails. That behaviour is undefined.

- They can also be used to expose interacting problems between modules (such as a third party library)

- Self-checks are more prevalent in DevOps

Test Oracles

- Informs us whether a piece of code has passed or failed

- Value comparision

- Version comparision

- Property checks

Assertions as Oracles

- Enable runtime assertion checking during testing

- Post-conditions check method outcomes

- Pre-conditions check correct method usage

- Invariants check object health

- Run time assertions increase fault sensitivity

- Increase likelihood program fails if there is a fault

- Desirable during testing

Pre- and post-conditions

- {P} A {Q} (also known as a Hoare Triple)

- given a state P, executing A yields Q

- Q = post-condition

- P = pre=condition

- preconditions are a design choice. Any adjustments we make in the preconditions can be moved to the body of the methodk

- A = method

assert PRECONDITION1;

assert PRECONDITION2;

//...

if (A) {

// ...

if (B) {

// ...

assert POSTCONDITION1;

return ...;

} else {

// ...

assert POSTCONDITION2;

return ...;

}

}

// ...

assert POSTCONDITION3;

return ...;

The method above has three conditions and three different return statements. This also gives us three post-conditions. In the example, if A and B are true, post-condition 1 should hold. If A is true but B is false, post-condition 2 should hold. Finally, if A is false, post-condition 3 should hold.

The placing of these post-conditions now becomes quite important, so the whole method is becoming rather complex with the post-conditions. Refactoring the method so that it has just a single return statement with a general post-condition is advisable. Otherwise, the post-condition essentially becomes a disjunction of propositions. Each return statement forms a possible post-condition (proposition) and the method guarantees that one of these post-conditions is met.

- The weaker the pre-conditions, the more situations a method is able to handle, and the less thinking the client needs to do. However, with weak pre-conditions, the method will always have to do the checking.

- The post-conditions are only guaranteed if the pre-conditions held; if not, the outcome can be anything. With weak pre-conditions the method might have to handle different situations, leading to multiple post-conditions.

Invariant

- Condition that holds throughout the lifetime of a system, an object or a data structure.

- (It is always true)

- A simple way of using invariants is by creating a “checker” method.

- Like the pre- & post- conditions, it throws

AssertionErrorwhen the boolean condition is false. - An invariant is often both a precondition and postcondition at the same time.

- Like the pre- & post- conditions, it throws

- Can also be implemented at the class level with

protected boolean invariant()method, which has to be asserted at the end of the class constructor method and at the start and end of each public method.- A class invariant ensures that its conditions will be true throughout the entire lifetime of the object.

- They can be used to test class hirearchies.

- A private method invoked by a public method can leave the object with the class invariant being false. However, the public method that invoked the private method should then fix this and end with the class invariant again being true.

- Static methods do not have invariants. Class invariants are related to the entire object, while static methods do not belong to any object (they are “stateless”), so the idea of (class) invariants does not apply to static methods.

Design by contracts

Interfaces as contracts

- A client and server are bound by a contract (where the pre conditions and post conditions are the respective binding clauses)

- The server promises to do its job (defined by the postconditions)

- As long as the client uses the server correctly (defined by the preconditions)

- These interfaces are used by the client and implemented by the server

Subcontracting

- In the UML diagram above, we see that the implementation can have different pre-, post-conditions, and invariants than its base interface.

- The implementation is a child of interface, therefore if we call a method of the implementation, it should be callable as often or more than the interface version. We want to ensure that the precondition of the interface is always met.

- P’ is weaker (or equal) than P

- The post condition of the interface must be always met, therefore the implementation precondition gets at least that done, or more.

- Q’ is stronger (or equal) than Q

- The invariant of the interface must always be true, therefore it must also be always true for the implementation.

- I’ is stronger (or equal) than I

Testing for Liskov Substitution Principle (LSP) Compliance

- Liskov substitution principle says that parent class tests hold for the children and hence these need not to be tested again, but inherit the test cases:

- Design test suite T at top interface level

- Reuse for all interface implementations

- Specific implementation may require additional tests, but should at least meet T

- We want to use the Factory Method design pattern in our tests for the List. We start by the interface level test class. Here, we define the abstract method that gives us a List.

public abstract class ListTest {

protected final List list = createList();

protected abstract List createList();

// Common List tests using list

}

- For this example, we create a class to test the ArrayList. We have to override the createList method and we can define any tests specific for the ArrayList.

public class ArrayListTest extends ListTest {

@Override

protected List createList() {

return new ArrayList();

}

// Tests specific for the ArrayList

}

- Now, the ArrayListTest inherits all the ListTest’s tests, so these will be executed when we execute the ArrayListTest test suite. Because the createList() method returns an ArrayList, the common test classes will use an ArrayList.

Example

package delft;

import java.util.HashMap;

import java.util.Map;

/** A square playing board. */

abstract class Board {

/** The size of the board. */

protected int size;

/**

* Creates a Board with a certain size.

*

* @param size

* the size of the board

* @throws IllegalArgumentException

* if the size is negative

*/

protected Board(int size) {

if (size < 0) {

throw new IllegalArgumentException("The size of the board cannot be negative.");

}

this.size = size;

}

/**

* Returns the Unit at a position of the board. If no such a Unit has been set

* before, it will return UNKNOWN.

*

* @param x

* x coordinate

* @param y

* y coordinate

* @return the unit at (x,y)

*/

public abstract Unit getUnit(int x, int y);

/**

* Sets the unit of a certain position on the board.

*

* @param x

* x coordinate

* @param y

* y coordinate

* @param unit

* the new unit for the position (x,y)

*/

public abstract void setUnit(int x, int y, Unit unit);

/**

* Checks whether the coordinates are in range and throws an exception if they

* aren't.

*

* @param x

* x coordinate

* @param y

* y coordinate

* @throws IllegalArgumentException

* when the coordinates are out of the range of the board

*/

protected void checkCoordinatesRange(int x, int y) {

if (x < 0 || x >= size || y < 0 || y >= size) {

throw new IllegalArgumentException(String.format("The position (%d, %d) does not exist.", x, y));

}

}

}

class MapBoard extends Board {

private final Map<Integer, Map<Integer, Unit>> board;

public MapBoard(int size) {

super(size);

board = new HashMap<>();

}

@Override

public Unit getUnit(int x, int y) {

checkCoordinatesRange(x, y);

if (board.containsKey(x) && board.get(x).containsKey(y)) {

return board.get(x).get(y);

}

return Unit.UNKNOWN;

}

@Override

public void setUnit(int x, int y, Unit unit) {

checkCoordinatesRange(x, y);

if (!board.containsKey(x)) {

board.put(x, new HashMap<>());

}

board.get(x).put(y, unit);

}

}

class ArrayBoard extends Board {

private final Unit[][] board;

public ArrayBoard(int size) {

super(size);

board = new Unit[size][size];

for (int i = 0; i < size; i++) {

for (int j = 0; j < size; j++) {

board[i][j] = Unit.UNKNOWN;

}

}

}

@Override

public Unit getUnit(int x, int y) {

checkCoordinatesRange(x, y);

return board[x][y];

}

@Override

public void setUnit(int x, int y, Unit unit) {

checkCoordinatesRange(x, y);

board[x][y] = unit;

}

}

enum Unit {

FRIEND, ENEMY, UNKNOWN

}

package delft;

import static org.assertj.core.api.Assertions.*;

import static org.junit.jupiter.api.Assertions.*;

import java.util.*;

import java.util.stream.*;

import org.junit.jupiter.api.*;

import org.junit.jupiter.params.*;

import org.junit.jupiter.params.provider.*;

abstract class BoardTest {

protected final Board board = createBoard(7);

abstract Board createBoard(int size);

@Test

void illegalSizeTest() {

assertThatThrownBy(() -> createBoard(-3)).isInstanceOf(IllegalArgumentException.class);

}

@Test

void createEmptyBoardTest() {

assertThat(createBoard(0)).isNotNull();

}

@MethodSource("illegalCoordinateBoundaryGenerator")

@ParameterizedTest(name = "illegal get coordinate ({0},{1}) test")

void getIllegalCoordinateTest(int x, int y) {

assertThatThrownBy(() -> board.getUnit(x, y)).isInstanceOf(IllegalArgumentException.class);

}

@MethodSource("illegalCoordinateBoundaryGenerator")

@ParameterizedTest(name = "illegal set coordinate ({0},{1}) test")

void setIllegalCoordinateTest(int x, int y) {

assertThatThrownBy(() -> board.setUnit(x, y, Unit.FRIEND)).isInstanceOf(IllegalArgumentException.class);

}

private static Stream<Arguments> illegalCoordinateBoundaryGenerator() {

return Stream.of(Arguments.of(-1, 2), Arguments.of(3, -1), Arguments.of(7, 2), Arguments.of(4, 7));

}

@MethodSource("validCoordinateBoundaryGenerator")

@ParameterizedTest(name = "valid get coordinate ({0}, {1}) test")

void boundaryGetTest(int x, int y) {

assertThat(board.getUnit(x, y)).isEqualTo(Unit.UNKNOWN);

}

private static Stream<Arguments> validCoordinateBoundaryGenerator() {

return Stream.of(Arguments.of(0, 3), Arguments.of(3, 0), Arguments.of(6, 2), Arguments.of(4, 6));

}

@Test

void getUnknownTest() {

assertThat(board.getUnit(6, 2)).isEqualTo(Unit.UNKNOWN);

}

@Test

void getPreviouslySetTest() {

board.setUnit(3, 2, Unit.ENEMY);

assertThat(board.getUnit(3, 2)).isEqualTo(Unit.ENEMY);

}

@Test

void getOtherElementOfPreviouslySetRowTest() {

board.setUnit(3, 2, Unit.ENEMY);

assertThat(board.getUnit(3, 4)).isEqualTo(Unit.UNKNOWN);

}

@Test

void setPreviouslySetTest() {

board.setUnit(0, 1, Unit.ENEMY);

board.setUnit(0, 1, Unit.FRIEND);

assertThat(board.getUnit(0, 1)).isEqualTo(Unit.FRIEND);

}

}

class MapBoardTest extends BoardTest {

@Override

Board createBoard(int size) {

return new MapBoard(size);

}

}

class ArrayBoardTest extends BoardTest {

@Override

Board createBoard(int size) {

return new ArrayBoard(size);

}

}

Property-Based testing

- Generates random inputs for the tests based on QuickCheck tool

public class PropertyTest {

@Property

void concatenationLength(@ForAll String s1, @ForAll String s2) {

String s3 = s1 + s2;

Assertions.assertEquals(s1.length() + s2.length(), s3.length());

}

}

- QuickCheck is a property specification language/library

- Data input generator for most default data types

- Mechanism to write your custom object generators

- Mechanism to constrain data generated (junit assume)

- Shrinking process to reduce inputs for failing tests to smallest input

- The java implementation we use is jqwik

Automated Self-Testing

- Random input generation

- Exercise system in variety of ways

- Clever generators for specific data types

- Whole test suite perspective

- Maximize coverage achieved by inputs

- Capture in fitness function

- Evolutionary search for fittest test suite

- Properties, contracts, assertions

- The oracle distinguishing success from failure

Examples

package delft;

class TaxIncome {

public static final double CANNOT_CALC_TAX = -1;

public double calculate(double income) {

if (0 <= income && income < 22100) {

return 0.15 * income;

} else if (22100 <= income && income < 53500) {

return 3315 + 0.28 * (income - 22100);

} else if (53500 <= income && income < 115000) {

return 12107 + 0.31 * (income - 53500);

} else if (115000 <= income && income < 250000) {

return 31172 + 0.36 * (income - 115000);

} else if (250000 <= income) {

return 79772 + 0.396 * (income - 250000);

}

return CANNOT_CALC_TAX;

}

}

package delft;

import static org.assertj.core.api.Assertions.*;

import static org.junit.jupiter.api.Assertions.*;

import java.util.*;

import java.util.stream.*;

import net.jqwik.api.*;

import net.jqwik.api.arbitraries.*;

import net.jqwik.api.constraints.*;

import org.junit.jupiter.api.*;

import org.junit.jupiter.params.*;

import org.junit.jupiter.params.provider.*;

class TaxIncomeTest {

private final TaxIncome taxIncome = new TaxIncome();

@Property

void tax22100max(@ForAll @DoubleRange(min = 0, max = 22100, maxIncluded = false) double income) {

assertEquals(taxIncome.calculate(income), 0.15 * income, Math.ulp(income));

}

@Property

void tax53500max(@ForAll @DoubleRange(min = 22100, max = 53500, maxIncluded = false) double income) {

assertEquals(taxIncome.calculate(income), 3315 + 0.28 * (income - 22100), Math.ulp(income));

}

@Property

void tax115000max(@ForAll @DoubleRange(min = 53500, max = 115000, maxIncluded = false) double income) {

assertEquals(taxIncome.calculate(income), 12107 + 0.31 * (income - 53500), Math.ulp(income));

}

@Property

void tax250000max(@ForAll @DoubleRange(min = 115000, max = 250000, maxIncluded = false) double income) {

assertEquals(taxIncome.calculate(income), 31172 + 0.36 * (income - 115000), Math.ulp(income));

}

@Property

void tax250000min(@ForAll @DoubleRange(min = 250000) double income) {

assertEquals(taxIncome.calculate(income), 79772 + 0.396 * (income - 250000), Math.ulp(income));

}

@Property

void invalid(@ForAll @Negative double income) {

assertEquals(taxIncome.calculate(income), -1, Math.ulp(income));

}

}

Testing Pyramid

- A large software system is composed of many units and responsibilities.

- As you climb the levels in the diagram, the tests become more realistic. At the same time, the tests also become more complex on the higher levels.

- The size of the pyramid slice represents the number of tests one would want to carry out at each test level.

Unit testing

- Testing a single feature while purposefully ignoring other units of the systems.

- A unit can span a single method, a whole class or multiple classes working together to achieve one single logical purpose that can be verified.

- Write unit tests when the component is about an algorithm or a single piece of business logic of the software system.

- Business logic often does not depend on external services and so it can easily be tested and fully exercised by means of unit tests.

- The way you design your classes has a high impact on how easy it is to write unit tests for your code.

Unit testing pros

- Fast execution

- Constant feedback allows for smooth evolutionary changes on the software

- Easy to control (easy to change inputs and expected outputs).

- Easy to write (easy setup and small tests)

Unit testing cons

- Lack of reality: Unit tests do not perfectly represent the real execution of a software system.

- Some types of bugs are not caught: Some types of bugs cannot be caught at unit test level. They only happen in the integration of the different components.

System testing

- Also known as black box testing (the system itself is regarded as a black box as we do not know everything that goes on inside of the system)

- Testing the system in its entirety (databases, front-end apps, etc.)

- Write system tests only after a risk-based approach where it is shown which parts of the systems are very bug prone, or where a specific part of the system must always be running, like the payment services of a webshop.

Black box testing pros

- Tests are realistic

- Tests capture the user perspective better than unit tests.

Black box testing cons

- Slower than unit tests

- Hard to write (especially setting up components for a testing scenario)

- They are “flaky” (fragile and bug prone): Due to their integration complexity often tests are not robust and do not manage to get the job done and deliver inconsistent results.

Integration testing

- Unit tests in isolation, blacbox tests the whole system, integration testing tests in between.

- The goal of integration testing is to test multiple components of a system together, focusing on the interactions between them instead of testing the system as a whole.

- Write integration tests whenever the component under test interacts with an external component (e.g., a database or a web service) integration tests are appropriate.

- making sure that the component that performs the integration is solely responsible for that integration and nothing else (i.e., no business rules together with integration code), will reduce the cost of the testing.

Integration testing pros

- Easier to write and less effort than system testing

Integration testing cons

- More complicated than a unit test

- The more integrated our tests are, the more difficult they are to write. In the example, setting up a database for the test requires effort. Tests that involve databases usually need to:

- make use of an isolated instance of the database just for testing purposes (as you probably do not want your tests to mess with production data),

- update the database schema (in fast companies, database schemas are changing all the time, and the test database needs to keep up),

- put the database into a state expected by the test by adding or removing rows,

- and clean everything afterwards (so that the next tests do not fail because of the data that was left behind by the previous test).

Manual testing

- Avoid them

Tsting pyramid at google

- Small test = unit test

- Medium test = integration test

- Large tests = system test

Test doubles

- We face challenges when unit testing classes that depend on other classes or on external infrastructure.

- It may creat an implicit dependency on a database, eventhough we dont really care about db behaviour. Therefore, we can unit test something assuming it’s dependencies work.

- To test A we mock the behaviour of component B. Within the test, we have full control over what this “fake component B” does so we can make it behave as B would in the context of this test and cut the dependency on the real object.

Test doubles pros

- We have more control: We can easily tell these objects what to do, without the need for complicated setups.

- Simulations are also faster: instead of waiting for a database API we can just return what we assume to be returned.

Types of doubles

- Dummy objects: Creating an object whose attributes are fake just for the porpuse of testing a method of that class

- Fake objects: They’re a poorman’s version of a more complex class, i.e. using an array list as a database object. They’re nonetheless working implmentations of the class they simulate.

- Stubs (Fixed Mockito): Stubs provide hard-coded answers to the calls that are performed during the test. Stubs do not actually have a working implementation, as fake objects do. Stubs do not know what to do if the test calls a method for which it was not programmed and/or set up.

- Spies: As the name suggests, spies “spy” a dependency. It wraps itself around the object and observes its behaviour. Strictly speaking it does not actually simulate the object, but rather just delegates all interactions to the underlying object while recording information about these interactions. Imagine you just need to know how many times a method X is called in a dependency: that is when a spy would come in handy. Example:

// this is a real list

List<String> list = new ArrayList<String>();

// this is a spy that will spy on the concrete list

// that is in the 'list' variable

List<String> spy = Mockito.spy(list);

// ...

spy.add(1); // this will call the concrete add() method

// ...

Mockito.verify(spy).add(1); // this will check whether add() was called

Furthermore, verify() is not limited to just mock spied objects, can also be used on mocked objects.

- Mocks: Mock objects act like stubs that are pre-configured ahead of time to know what kind of interactions should occur with them.

Mockito

- In general, we do not mock the class under test.

mock(<class>): creates a mock object/stub of a given class. The class can be retrieved from any class by.class. when(<mock>.<method>).thenReturn(<value>): defines the behaviour when the given method is called on the mock. In this casewill be returned. verify(<mock>).<method>: asserts that the mock object was exercised in the expected way for the given method.

import static java.util.Arrays.asList;

import static org.assertj.core.api.Assertions.assertThat;

import static org.mockito.Mockito.when;

public class InvoiceFilterTest {

private final IssuedInvoices issuedInvoices = Mockito.mock(IssuedInvoices.class);

private final InvoiceFilter filter = new InvoiceFilter(issuedInvoices);

@Test

void filterInvoices() {

final var mauricio = new Invoice("Mauricio", 20);

final var steve = new Invoice("Steve", 99);

final var arie = new Invoice("Arie", 300);

when(issuedInvoices.all()).thenReturn(asList(mauricio, arie, steve));

assertThat(filter.lowValueInvoices()).containsExactlyInAnyOrder(mauricio, steve);

}

}

- Mockito does not allow us to stub static methods (although some other more magical mock frameworks do). Static calls are indeed enemies of testability, as they do not allow for easy stubbing.

- It can be worked around by creating abstractions on top of dependencies that you do not own, which is a common technique among developers.

Mocking and stabbing

Stubbing means simply returning hard-coded values for a given method call. Mocking means not only defining what methods do, but also explicitly defining how the interactions with the mock should be.

Mockito actually enables us to define even more specific expectations. For example, see the expectations below:

verify(sap, times(2)).send(any(Invoice.class));

verify(sap, times(1)).send(mauricio);

verify(sap, times(1)).send(steve);

Developers often mock/stub the following types of dependencies:

- Dependencies that are too slow: If the dependency is too slow, for any reason, it might be a good idea to simulate that dependency.

- Dependencies that communicate with external infrastructure: If the dependency talks to (external) infrastructure, it might be too complex to be set up. Consider stubbing it.

- Hard to simulate cases: If we want to force the dependency to behave in a hard-to-simulate way, mocks/stubs can help.

Developers tend not to mock/stub:

- Entities. An entity is a simple class that mirrors a collection in a database, while instances of this class mirror the entries of that collection.

- Native libraries and utility methods. It is not common to mock/stub libraries that come with our programming language and utility methods. For example, why would one mock ArrayList or a call to String.format? As shown with the Calendar example above, any library or utility methods that harm testability can be abstracted away.

Trade-off:

- Whenever you mock, you reduce the reality of the test

- Mocks need to be updated as the objects they mock change

Mocking at google

- Using test doubles requires the system to be designed for testability. Dependency injection is the common technique to enable test doubles.

- Building test doubles that are faithful to the real implementation is challenging. Test doubles have to be as faithful as possible.

- Prefer realism over isolation. When possible, opt for the real implementation, instead of fakes, stubs, or mocks.

- Some trade-offs to consider when deciding whether to use a test double: the execution time of the real implementation, how much non-determinism we would get from using the real implementation.

- When using the real implementation is not possible or too costly, prefer fakes over mocks. An in-memory database, for example, might be better (or more real) than a mock.

- Excessive mocking can be dangerous, as tests become unclear (i.e., hard to comprehend), brittle (i.e., might break too often), and less effective (i.e., reduced fault capability detection).

- When mocking, prefer state testing rather than interaction testing. In other words, make sure you are asserting a change of state and/or the consequence of the action under test, rather than the precise interaction that the action has with the mocked object. After all, interaction testing tends to be too coupled with the implementation of the system under test.

- Use interaction testing when state testing is not possible, or when a bad interaction might have an impact in the system (e.g., calling the same method twice would make the system twice as slow).

- Avoid overspecified interaction tests. Focus on the relevant arguments and functions.

- Good interaction testing requires strict guidelines when designing the system under test. Google engineers tend not to do it.

Design for testability

- Testability is the term used to describe how easy it is to write automated tests for the system, class, or method to be tested.

Dependency injection

- Dependency injection is a design choice we can use to make our code more testable.

- Instead of the class instantiating the dependency itself, the class asks for the dependency (via constructor or a setter, for example).

- Example:

applyDiscount(){//check the Calendar static field date and apply discount}vsapplyDiscount(date){//apply discount based on passed parameters}, the second option makes the creation of automated tests easier and, therefore, increases the testability of the code.

- The use of dependency injection improves our code in many ways:

- It enables us to mock/stub the dependencies in the test code, increasing the productivity of the developer during the testing phase.

- It makes all the dependencies more explicit; after all, they all need to be injected (via constructor, for example).

- It affords better separation of concerns: classes now do not need to worry about how to build their dependencies, as they are injected to them.

- With Java, you generally need dependency injection to be able to mock dependencies, whereas JavaScript allows you to mock any imported dependency without changing the production code.

Domain vs infrastructure

- The domain is where the core of the system lies, i.e. where all the business rules, logic, entities, services, etc, reside.

- Infrastructure relates to all code that handles some infrastructure. For example, pieces of code that handle database queries, or webservice calls, or file reads and writes.

- When domain code and infrastructure code are mixed up together, the system becomes harder to test.

- Try to keep these 2 things separate from each other when making methods.

Implementation-level tips on designing for testability

- Cohesion and testability: cohesive classes are classes that do only one thing. Cohesive classes tend to be easier to test as a non-cohesive class requires exponential test cases.

- Coupling and testability: Coupling refers to the number of classes that a class depends on. A highly coupled class requires several other classes to do its work. Coupling decreases testability.

- Complex conditions and testability: Reducing the complexity of such conditions, for example by breaking it into multiple smaller conditions, will not reduce the overall complexity of the problem, but will “spread” it.

- Private methods and testability: In principle, testers should test private methods only through their public methods. Otherwise you should refactor it: extract this method, maybe to a brand new class. There, the former private method, now a public method, can be tested normally by the developer. The original class, where the private method used to be, should now depend on this new class.

- Static methods and testability: static methods adversely affect testability, as they can not be stubbed easily. Therefore, a good rule of thumb is to avoid the creation of static methods whenever possible. Exceptions to this rule are utility methods. As we saw before, utility methods are often not mocked.

- If your system has to depend on a specific static method, e.g., because it comes with the framework your software depends on, adding an abstraction on top of it might be a good decision to facilitate testability.

Web testing

Simplified architecture of a web application:

Javascript front-end

- Try to separate javascript from HTML

- Apply some kind of modular design, creating small, independent components that are easily tested

- Use a JavaScript library or framework (like Vue.js, React or Angular) in order to achieve such a structure

- Do a significant amount of refactoring to make the code testable

Client-server model

- The fact that the server side can be written in any programming language you like, means that you can stick to your familiar testing ecosystem there

- It poses challenges, like possibly having different programming languages and corresponding ecosystems on the client and server side

- Just testing the front and back end separately will probably not cut it

- Reflect how a user uses the application by performing end-to-end tests

- You have to have a web server running while executing such a test

- Server needs to be in the right state (especially if it uses a database), and the versions of front and back end need to be compatible

Everyone can access your application

- The users will have very different backgrounds. This makes usability testing and accessibility testing testing a priority

- The number of users of your application may become very high at any given point in time, so load testing is a wise thing to do

- Some of those users may have malicious intent. Therefore security testing is of utmost importance

The front end runs in a browser

- Different users use different versions of different browsers. Cross-browser testing helps to ensure that your application will work in the browsers you support

- Test for responsive web design to make sure the application will look good in browsers with different sizes, running on different devices

- HTML should be designed for testability, so that you can select elements, and so that you can test different parts of the user interface (UI) independently

- UI component testing can be considered a special case of unit testing: here your “unit” is one part of the Document Object Model (DOM), which you feed with certain inputs, possibly triggering some events (like “click”), after which you check whether the new DOM state is equal to what you expected

- Snapshot testing can help you to make sure your UI does not change unexpectedly

- To perform end-to-end tests automatically, you will have to somehow control the browser and make it simulate user interaction. Two well-known tools for this are Selenium WebDriver and Cypress.

Selenium WebDriver example

import org.junit.jupiter.api.Test;

import org.openqa.selenium.By;

import org.openqa.selenium.WebDriver;

import org.openqa.selenium.WebElement;

import org.openqa.selenium.firefox.FirefoxDriver;

import static org.assertj.core.api.Assertions.assertThat;

public class SeleniumWebDriverTest {

@Test

void spanishTitle() {

System.setProperty("webdriver.gecko.driver", "C:\\Users\\sergio\\OneDrive - Delft University of Technology\\CSE\\Y1\\Q4\\CSE1110 Software Quality and Testing\\geckodriver-v0.29.1-win64\\geckodriver.exe");

WebDriver driver = new FirefoxDriver();

driver.get("https://www.wikipedia.org");

WebElement link;

link = driver.findElement(By.id("js-link-box-es"));

link.click();

String title;

title = driver.getTitle();

assertThat(title).isEqualTo("Wikipedia, la enciclopedia libre");

}

}

Many web applications are asynchronous

- Most of the requests to the server are done in an asynchronous manner: while the browser is waiting for results from the server, the user can continue to use the application

- When writing unit tests, and end-to-end tests, you have to account for this by “awaiting” the results from the (mocked) server call before you can check whether the output of your unit matches what you expected

- This can leaad to flaky tests

- You either have to write custom code to make your tests more robust, or use a tool like Cypress, which has retry-and-timeout logic built-in

Javascript Unit testing

- Reasons why it is difficult or impossible to write unit tests for this piece of code:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8" />

<title>Date incrementer - version 1</title>

</head>

<body>

<p>Date will appear here.</p>

<button onclick="incrementDate(this)">+1</button>

<script>

function incrementDate(sender) {

var p = sender.parentNode.children[0];

var date = new Date(p.innerText);

date.setDate(date.getDate() + 1);

p.innerText = date.toISOString().slice(0, 10);

}

window.onload = function () {

var p = document.getElementsByTagName("p")[0];

p.innerText = new Date().toISOString().slice(0, 10);

}

</script>

</body>

</html>

- the JavaScript code is inline with the rest of the page (You cannot run the code without also running the rest of the page, so you cannot test the functions separately, as a unit). Just refactor it into seprate files.

- incrementDate() function is a mix of date logic and user interface (UI) code. These parts cannot be tested separately. We have also become dependent on the implementation of the date conversion functions. We should solve these problems by splitting up the incrementDate() function into different functions and storing the currently shown date in a variable.

- Another problem is that the initial date value is hard-coded: the code always uses the current date (by calling new Date()), so you cannot test what happens with cases like “February 29th, 2020”.

- The <p> element was difficult to locate using the standard JavaScript query selectors, and we resorted to abusing the DOM structure to find it. We thereby unnecessarily imposed restrictions on the DOM structure (the <p> element must now be on the same level as the button, and it must be the first element)

- For your UI tests, you should make sure that you can reliably select the elements you use.

- We can achieve this by adding an id to the element and then selecting it by using getElementById().

- In general, it is better to find elements in the same way that users find them (for example, by using labels in a form).

The aforementioned issues are solved by refactoring the code and splitting it up into three files. The first one (dateUtils.js) contains the utility functions for working with dates, which can now nicely be tested as separate units:

// Advances the given date by one day.

function incrementDate(date) {

date.setDate(date.getDate() + 1);

}

// Returns a string representation of the given date

// in the format yyyy-MM-dd.

function dateToString(date) {

return date.toISOString().slice(0, 10);

}

The second one (dateIncrementer.js) contains the code for keeping track of the currently shown date and the UI interaction:

function DateIncrementer(initialDate, dateElement) {

this.date = new Date(initialDate.getTime());

this.dateElement = dateElement;

}

DateIncrementer.prototype.increment = function () {

incrementDate(this.date);

this.updateView();

};

DateIncrementer.prototype.updateView = function () {

this.dateElement.innerText = dateToString(this.date);

};

The third one is the refactored HTML file (dateIncrementer2.html) that uses our newly created JavaScript files:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8" />

<title>Date incrementer - version 2</title>

</head>

<body>

<p id="pDate">Date will appear here.</p>

<button id="btnIncrement">+1</button>

<script src="dateUtils.js"></script>

<script src="dateIncrementer.js"></script>

<script>

window.onload = function () {

var incrementer = new DateIncrementer(

new Date(), document.getElementById("pDate"));

var btn = document.getElementById("btnIncrement");

btn.onclick = function () { incrementer.increment(); };

incrementer.updateView();

}

</script>

</body>

</html>

The date handling logic can now be tested separately, as well as the UI code. The initial date can now be supplied as an argument. The <p> element can now be found by its ID. We should now be ready to write some tests!

Javascript Unit test without framework

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8" />

<title>Date utils - Test</title>

</head>

<body>

<p>View the console output for test results.</p>

<script src="dateUtils.js"></script>

<script>

function assertEqual(expected, actual) {

if (expected != actual) {

console.log("Expected " + expected + " but was " + actual);

}

}

/*

Be aware that in JavaScript Date objects, months are zero-based,

meaning that month 0 is January, month 1 is February, etc.

So "new Date(2020, 1, 29)" represents February 29th, 2020.

*/

// Test 1: incrementDate should add 1 day a given date object

var date1 = new Date(2020, 1, 29); // February 29th, 2020

incrementDate(date1);

// This succeeds:

assertEqual(new Date(2020, 2, 1).getTime(), date1.getTime());

// Test 2: dateToString should return the date in the form "yyyy-MM-dd"

var date2 = new Date(2020, 4, 1); // May 1st, 2020

// This fails because of time zone issues

// (the actual value is "2020-04-30"):

assertEqual("2020-05-01", dateToString(date2));

</script>

</body>

</html>

Javascript UI test without framework

- It has to be done manually

- The state of the UI is not reset after the UI tests (so it’s a problem if you want to test more tests afterwards)

JavaScript unit testing (with React and Jest)

- Create a new React application using the Create React App tool

- This creates a project structure with the necessary dependencies and includes the unit testing framework Jest.

import { addOneDay, dateToString } from './dateUtils';

describe('addOneDay', () => {

test('handles February 29th', () => {

const oldDate = new Date(2020, 1, 29); // February 29th, 2020

const newDate = addOneDay(oldDate);

expect(newDate).toEqual(new Date(2020, 2, 1));

});

});

describe('dateToString', () => {

test('returns the date in the form "yyyy-MM-dd"', () => {

var date = new Date(2020, 4, 1); // May 1st, 2020

expect(dateToString(date)).toEqual("2020-05-01");

});

});

- We can now take advantage of the module system. We do not have to load the functions into global scope any more, but just import the functions we need.

- We use the Jest syntax, where describe is optionally used to group several tests together, and test is used to write a test case, with a string describing the expected behaviour and a function executing the actual test. The body of the test function uses the “fluent” syntax expect(…).toEqual(…)

Tests for the UI with Jest

- Here you see a similar Jest unit test structure, but we also use react-testing-library to render the UI component to a virtual DOM. In react-testing-library, you are encouraged to test components like a user would test them. This is why we use functions like getByText to look up elements. This also means that we did not have to include any ids or other ways of identifying the <p> and the < button > in the component.

import React from 'react';

import { render, fireEvent } from '@testing-library/react';

import DateIncrementer from './DateIncrementer';

test('renders initial date', () => {

const { getByText } = render(<DateIncrementer initialDate={new Date(2020, 0, 1)} />);

const dateElement = getByText("2020-01-01");

expect(dateElement).toBeInTheDocument();

});

test('updates correctly when clicking the "+1" button', () => {

const date = new Date(2020, 0, 1);

const { getByText } = render(<DateIncrementer initialDate={date} />);

const button = getByText("+1");

fireEvent.click(button);

const dateElement = getByText("2020-01-02");

expect(dateElement).toBeInTheDocument();

});

Jest mocks

jest.mock('./dateUtils');

import React from 'react';

import { render, fireEvent } from '@testing-library/react';

import { dateToString, addOneDay } from './dateUtils';

import DateIncrementer from './DateIncrementer';

test('renders initial date', () => {

dateToString.mockReturnValue("mockDateString");

const date = new Date(2020, 0, 1);

const { getByText } = render(<DateIncrementer initialDate={date} />);

const dateElement = getByText("mockDateString");

expect(dateToString).toHaveBeenCalledWith(date);

expect(dateElement).toBeInTheDocument();

});

test('updates correctly when clicking the "+1" button', () => {

const mockDate = new Date(2021, 6, 7);

addOneDay.mockReturnValueOnce(mockDate);

const date = new Date(2020, 0, 1);

const { getByText } = render(<DateIncrementer initialDate={date} />);

const button = getByText("+1");

fireEvent.click(button);

expect(addOneDay).toHaveBeenCalledWith(date);

expect(dateToString).toHaveBeenCalledWith(mockDate);

});

- Here, jest.mock(‘./dateUtils’) replaces every function that is exported from the dateUtils module by a mocked version. You can then provide alternative implementations with functions like mockReturnValue, and check whether the functions have been called with functions like expect(…).toHaveBeenCalledWith(…).

- The version with mocks is less ‘real’ than the one without. The one without mocks is arguably preferable. However, you could use the same mechanism for things like HTTP requests to a back end, and in that case mocking would certainly be helpful.

Snapshot testing

- Snapshot testing is useful if you want detect unexpected changes to the component output

- If all you want to do is make sure that your UI does not change unexpectedly, snapshot tests are a good fit.

- The first time you run a snapshot test, it takes a snapshot of the component as it is rendered in that initial run. That first time, the test will always pass. Then in all subsequent runs, the rendered output is compared to the snapshot. If the output is different, the test fails and you are presented with the differences between the two versions.

- The test runner allows you to inspect the differences and decide whether the changes are what you intended. You can then either change the component so that its output corresponds to the snapshot, or you press a button to update the snapshot and mark the newly rendered output as the correct one.

In Jest, such a test can look like this:

test('renders correctly via snapshot', () => {

const { container } = render(<DateIncrementer initialDate={new Date(2020, 0, 1)} />);

expect(container).toMatchSnapshot();

});

On the first run, this creates a file called DateIncrementer.test.js.snap with the following contents:

// Jest Snapshot v1, https://goo.gl/fbAQLP

exports[`renders correctly via snapshot 1`] = `

<div>

<div>

<p>

2020-01-01

</p>

<button>

+1

</button>

</div>

</div>

`;

On subsequent runs, the output of the test is compared to the corresponding snapshot.

The created snapshot file should be committed to your version control system (like Git), so that your tests can also run on your Continuous Integration (CI) system.

End-to-end testing

- The goal of end-to-end testing is to test the flow through the application as a user might follow it, while integrating the various components of the web application (such as the front end, back end and database)

- You should make this as realistic as possible, so you use an actual browser and perform the tests on a production-like version of the application components

- A well-known tool for this is Selenium WebDriver. It basically acts as a “remote control” for your browser, so you can instruct it to “open this page, click this button, wait for that element to appear”, etc. You write these tests in one of the supported languages (such as Java) with your favourite unit testing framework.

- The WebDriver API is now a W3C standard, and several implementations of it (other than Selenium) exist, such as WebDriverIO and Cypress.